Prompt design strategies such as few-shot prompting may not always produce the results you need. Use model tuning to improve a model's performance on specific tasks, or to help the model adhere to specific output requirements, when instructions aren't sufficient and you have a set of examples that demonstrate the outputs you want.

This page provides guidance on tuning the text model behind the Gemini API text service.

How model tuning works

The goal of model tuning is to further improve the performance of the model for your specific task. Model tuning works by providing the model with a training dataset containing many examples of the task. For niche tasks, you can get significant improvements in model performance by tuning the model on a modest number of examples.

Your training data should be structured as examples with prompt inputs and expected response outputs. You can also tune models using example data directly in Google AI Studio. The goal is to teach the model to mimic the desired behavior or task by giving it many examples that illustrate that behavior or task.
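As a minimal sketch of what such a dataset could look like in Python, the following builds a list of prompt/response pairs and checks that every example has both parts. The field names `text_input` and `output` and the helper `validate_examples` are assumptions made for illustration; check the API reference for the exact schema your tuning call expects.

```python
from typing import Dict, List

# Each example pairs a prompt input with the expected response output.
# The keys "text_input" and "output" are assumed names, not confirmed by this page.
training_data: List[Dict[str, str]] = [
    {"text_input": "one", "output": "two"},
    {"text_input": "two", "output": "three"},
    {"text_input": "three", "output": "four"},
]


def validate_examples(examples: List[Dict[str, str]]) -> int:
    """Return the number of examples, raising ValueError on an incomplete one."""
    for index, example in enumerate(examples):
        for key in ("text_input", "output"):
            value = example.get(key)
            if not isinstance(value, str) or not value.strip():
                raise ValueError(f"example {index} has a missing or empty {key!r}")
    return len(examples)


print(validate_examples(training_data))  # prints 3
```

Running a check like this before starting a tuning job catches empty prompts or responses early, when they are cheap to fix.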

When you run a tuning job, the model learns additional parameters that help it encode the information needed to perform the desired task or learn the desired behavior. These parameters can then be used at inference time. The output of the tuning job is a new model, which is effectively a combination of the newly learned parameters and the original model.

Supported models

The following foundation models support model tuning. Only single-turn text completion is supported.

- Gemini 1.0 Pro

- text-bison-001

Workflow for model tuning

The model tuning workflow is as follows:

- Prepare your dataset.

- Import the dataset if you're using Google AI Studio.

- Start a tuning job.

After model tuning completes, the name of your tuned model is displayed. You can also select it in Google AI Studio as the model to use when creating a new prompt.

Prepare your dataset

Before you can start tuning, you need a dataset to tune the model with. For best performance, the examples in the dataset should be of high quality, diverse and representative of real inputs and outputs.

Format

The examples included in your dataset should match your expected production traffic. If your dataset contains specific formatting, keywords, instructions, or information, the production data should be formatted in the same way and contain the same instructions.

For example, if the examples in your dataset include a "question:" and a "context:", production traffic should also be formatted to include a "question:" and a "context:" in the same order as it appears in the dataset examples. If you exclude the context, the model can't recognize the pattern, even if the exact question was in an example in the dataset.
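One way to keep training and production prompts aligned is to build both with the same helper. The sketch below assumes a simple two-line template; the function name `format_prompt`, the exact layout, and the `text_input` and `output` keys are hypothetical choices for illustration.

```python
def format_prompt(question: str, context: str) -> str:
    """Build a prompt with "question:" first and "context:" second."""
    return f"question: {question}\ncontext: {context}"


# Use the helper when building a dataset example (field names are assumed)...
training_example = {
    "text_input": format_prompt(
        "What is the file size limit?",
        "The file size limit is 4MB.",
    ),
    "output": "4MB",
}

# ...and reuse it unchanged for the prompt sent to the tuned model.
inference_prompt = format_prompt(
    "How many epochs are used by default?",
    "The default value for Epoch is 5.",
)

print(inference_prompt)
```

Because both paths go through one function, a later change to the template applies to the dataset and to production traffic at the same time.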

Adding a prompt or preamble to each example in your dataset can also help improve the performance of the tuned model. Note that if a prompt or preamble is included in your dataset, it should also be included in the prompt to the tuned model at inference time.

Training data size

You can tune a model with as few as 20 examples, and additional data generally improves the quality of the responses. You should target between 100 and 500 examples, depending on your application. The following table shows recommended dataset sizes for tuning a text model for various common tasks:

| Task | No. of examples in dataset |
|---|---|
| Classification | 100+ |
| Summarization | 100-500+ |
| Document search | 100+ |

Upload tuning dataset

Data is either passed inline using the API or through files uploaded in Google AI Studio.

In Google AI Studio, use the Import button to import data from a file, or choose a structured prompt with examples to import as your tuning dataset.

Client library

To use the client library, provide the data file in the createTunedModel call.

The file size limit is 4 MB. See the tuning quickstart with Python to get started.
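A quick local check can catch an oversized data file before you submit it. This is a sketch only: it assumes the 4 MB limit means 4 × 1024 × 1024 bytes (the page does not say whether the limit is binary or decimal), and `fits_size_limit` is a hypothetical helper, not part of the client library.

```python
import os
import tempfile

# Assumption: "4 MB" is interpreted as 4 MiB; adjust if the limit is decimal.
MAX_FILE_BYTES = 4 * 1024 * 1024


def fits_size_limit(path: str, limit: int = MAX_FILE_BYTES) -> bool:
    """Return True if the file at `path` is no larger than `limit` bytes."""
    return os.path.getsize(path) <= limit


# Demonstrate with a small temporary file standing in for a real dataset.
with tempfile.NamedTemporaryFile("w", suffix=".json", delete=False) as handle:
    handle.write('[{"text_input": "one", "output": "two"}]')
    sample_path = handle.name

print(fits_size_limit(sample_path))  # prints True
os.remove(sample_path)
```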

Curl

To call the REST API using Curl, provide training examples in JSON format to the training_data argument. See the tuning quickstart with Curl to get started.

Advanced tuning settings

When creating a tuning job, you can specify the following advanced settings:

- Epochs - An epoch is a full training pass over the entire training set, such that each example has been processed once. This setting is the number of such passes.

- Batch size - The number of examples used in one training iteration.

- Learning rate - A floating-point number that tells the algorithm how strongly to adjust the model parameters on each iteration. For example, a learning rate of 0.3 would adjust weights and biases three times more powerfully than a learning rate of 0.1. High and low learning rates have their own unique trade-offs and should be adjusted based on your use case.

- Learning rate multiplier - The rate multiplier modifies the model's original learning rate. A value of 1 uses the original learning rate of the model. Values greater than 1 increase the learning rate, and values between 0 and 1 lower it.
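The arithmetic behind these settings can be sketched in a few lines of Python. The helper names are hypothetical, and the sketch assumes that a final partial batch still counts as one iteration; the tuning service may handle partial batches differently.

```python
import math


def steps_per_epoch(num_examples: int, batch_size: int) -> int:
    """Iterations needed to process every example once (partial batch counted)."""
    return math.ceil(num_examples / batch_size)


def total_steps(num_examples: int, batch_size: int, epochs: int) -> int:
    """Iterations across all epochs."""
    return steps_per_epoch(num_examples, batch_size) * epochs


def effective_learning_rate(base_rate: float, multiplier: float) -> float:
    """Learning rate after applying the multiplier to the original rate."""
    return base_rate * multiplier


# 100 examples with a batch size of 4 and 5 epochs.
print(steps_per_epoch(100, 4))  # prints 25
print(total_steps(100, 4, 5))   # prints 125

# A multiplier of 1 keeps the original rate; 0.5 halves it.
print(effective_learning_rate(0.001, 1.0))  # prints 0.001
print(effective_learning_rate(0.001, 0.5))  # prints 0.0005
```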

Recommended configurations

The following table shows the recommended configurations for tuning a foundation model:

| Hyperparameter | Default value | Recommended adjustments |
|---|---|---|
| Epoch | 5 | If the loss starts to plateau before 5 epochs, use a smaller value. If the loss is converging and doesn't seem to plateau, use a higher value. |
| Batch size | 4 | |
| Learning rate | 0.001 | Use a smaller value for smaller datasets. |

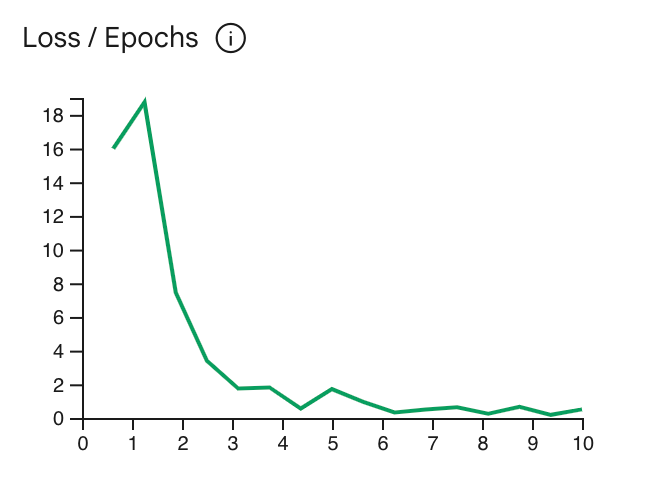

The loss curve shows how much the model's predictions deviate from the ideal predictions in the training examples after each epoch. Ideally, you want to stop training at the lowest point in the curve, right before it plateaus. For example, the graph shows the loss curve plateauing at about epoch 4-6, which means you can set the Epoch parameter to 4 and still get the same performance.
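If you have the per-epoch loss values, a simple heuristic can suggest where the curve flattens. The sketch below is one possible approach, not the method used by the tuning service: `plateau_epoch` is a hypothetical helper, the threshold of 0.01 is an arbitrary assumption, and the loss values are made up for illustration.

```python
from typing import Sequence


def plateau_epoch(losses: Sequence[float], min_improvement: float = 0.01) -> int:
    """Return the 1-based epoch after which the loss stops improving.

    `losses[i]` is the loss measured after epoch i + 1. The first epoch whose
    successor improves the loss by less than `min_improvement` is returned.
    If the loss never flattens, the last epoch is returned.
    """
    for epoch in range(1, len(losses)):
        if losses[epoch - 1] - losses[epoch] < min_improvement:
            return epoch
    return len(losses)


# Illustrative values only: the loss drops quickly, then flattens after epoch 4.
example_losses = [2.0, 1.2, 0.8, 0.6, 0.598, 0.597]
print(plateau_epoch(example_losses))  # prints 4
```

A result equal to the number of epochs you trained for means the loss was still improving, which suggests trying a higher Epoch value.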

Check tuning job status

You can check the status of your tuning job in the Google AI Studio UI under the My Library tab, or by using the metadata property of the tuned model in the Gemini API.

Troubleshoot errors

This section includes tips on how to resolve errors you may encounter while creating your tuned model.

Authentication

Tuning using the API and client library requires user authentication. An API key alone is not sufficient. If you see a 'PermissionDenied: 403 Request had insufficient authentication scopes' error, you need to set up user authentication.

To configure OAuth credentials for Python, refer to the OAuth setup tutorial.

Canceled models

You can cancel a model tuning job at any time before the job is finished. However, the inference performance of a canceled model is unpredictable, particularly if the tuning job is canceled early in training. If you canceled because you want to stop training at an earlier epoch, you should create a new tuning job and set the number of epochs to a lower value.

What's next

- Learn about responsible AI best practices.

- Get started with the tuning quickstart with Python or the tuning quickstart with Curl.