
You can provide Gemini models with descriptions of functions. The model may ask you to call a function and send back the result to help the model handle your query.

Setup

Install the Python SDK

The Python SDK for the Gemini API is contained in the google-generativeai package. Install the dependency using pip:

```shell
pip install -U -q google-generativeai
```

Import packages

Import the necessary packages.

```python
import pathlib
import textwrap
import time

import google.generativeai as genai

from IPython import display
from IPython.display import Markdown


def to_markdown(text):
    # Render model output as a Markdown blockquote in the notebook.
    text = text.replace('•', ' *')
    return Markdown(textwrap.indent(text, '> ', predicate=lambda _: True))
```
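To see why `to_markdown` passes `predicate=lambda _: True`, here is a small standard-library check (the sample text is made up). By default `textwrap.indent` skips whitespace-only lines, which would split the Markdown blockquote at every blank line; the predicate makes it prefix every line.

```python
import textwrap

sample = "First line\n\nSecond paragraph"

# Default behaviour: only lines containing non-whitespace get the prefix,
# so the blank line would end the blockquote and start a new one.
default = textwrap.indent(sample, '> ')

# With a predicate that always returns True, blank lines are prefixed too
# and the whole text stays inside a single blockquote.
quoted = textwrap.indent(sample, '> ', predicate=lambda _: True)

print(repr(default))
print(repr(quoted))
```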

Set up your API key

Before you can use the Gemini API, you must first obtain an API key. If you don't already have one, create a key with one click in Google AI Studio.

In Colab, add the key to the secrets manager under the "🔑" in the left panel. Give it the name GOOGLE_API_KEY.

Once you have the API key, pass it to the SDK. You can do this in two ways:

- Put the key in the `GOOGLE_API_KEY` environment variable (the SDK will automatically pick it up from there).
- Pass the key to `genai.configure(api_key=...)`.

```python
try:
    # Used to securely store your API key
    from google.colab import userdata
    # Or use `os.getenv('GOOGLE_API_KEY')` to fetch an environment variable.
    GOOGLE_API_KEY = userdata.get('GOOGLE_API_KEY')
except ImportError:
    # Outside Colab, read the key from the environment instead.
    import os
    GOOGLE_API_KEY = os.environ['GOOGLE_API_KEY']

genai.configure(api_key=GOOGLE_API_KEY)
```

Function Basics

You can pass a list of functions to the tools argument when creating a genai.GenerativeModel.

```python
def multiply(a: float, b: float):
    """returns a * b."""
    return a * b

model = genai.GenerativeModel(model_name='gemini-1.0-pro',
                              tools=[multiply])

model
```

```
genai.GenerativeModel(
    model_name='models/gemini-1.0-pro',
    generation_config={},
    safety_settings={},
    tools=<google.generativeai.types.content_types.FunctionLibrary object at 0x10e73fe90>,
)
```

The recommended way to use function calling is through the chat interface, mainly because function calls fit naturally into the multi-turn structure of a chat.

```python
chat = model.start_chat(enable_automatic_function_calling=True)
```

With automatic function calling enabled, `chat.send_message` automatically calls your function if the model asks it to.

The call appears to simply return a text response containing the correct answer:

```python
response = chat.send_message('I have 57 cats, each owns 44 mittens, how many mittens is that in total?')
response.text
```

```
'The total number of mittens is 2508.'
```

```python
57*44
```

```
2508
```

If you look in `ChatSession.history`, you can see the sequence of events:

- You sent the question.
- The model replied with a `glm.FunctionCall`.
- The `genai.ChatSession` executed the function locally and sent the model back a `glm.FunctionResponse`.
- The model used the function output in its answer.

```python
for content in chat.history:
    part = content.parts[0]
    print(content.role, "->", type(part).to_dict(part))
    print('-'*80)
```

```
user -> {'text': 'I have 57 cats, each owns 44 mittens, how many mittens is that in total?'}
--------------------------------------------------------------------------------
model -> {'function_call': {'name': 'multiply', 'args': {'a': 57.0, 'b': 44.0}}}
--------------------------------------------------------------------------------
user -> {'function_response': {'name': 'multiply', 'response': {'result': 2508.0}}}
--------------------------------------------------------------------------------
model -> {'text': 'The total number of mittens is 2508.'}
--------------------------------------------------------------------------------
```

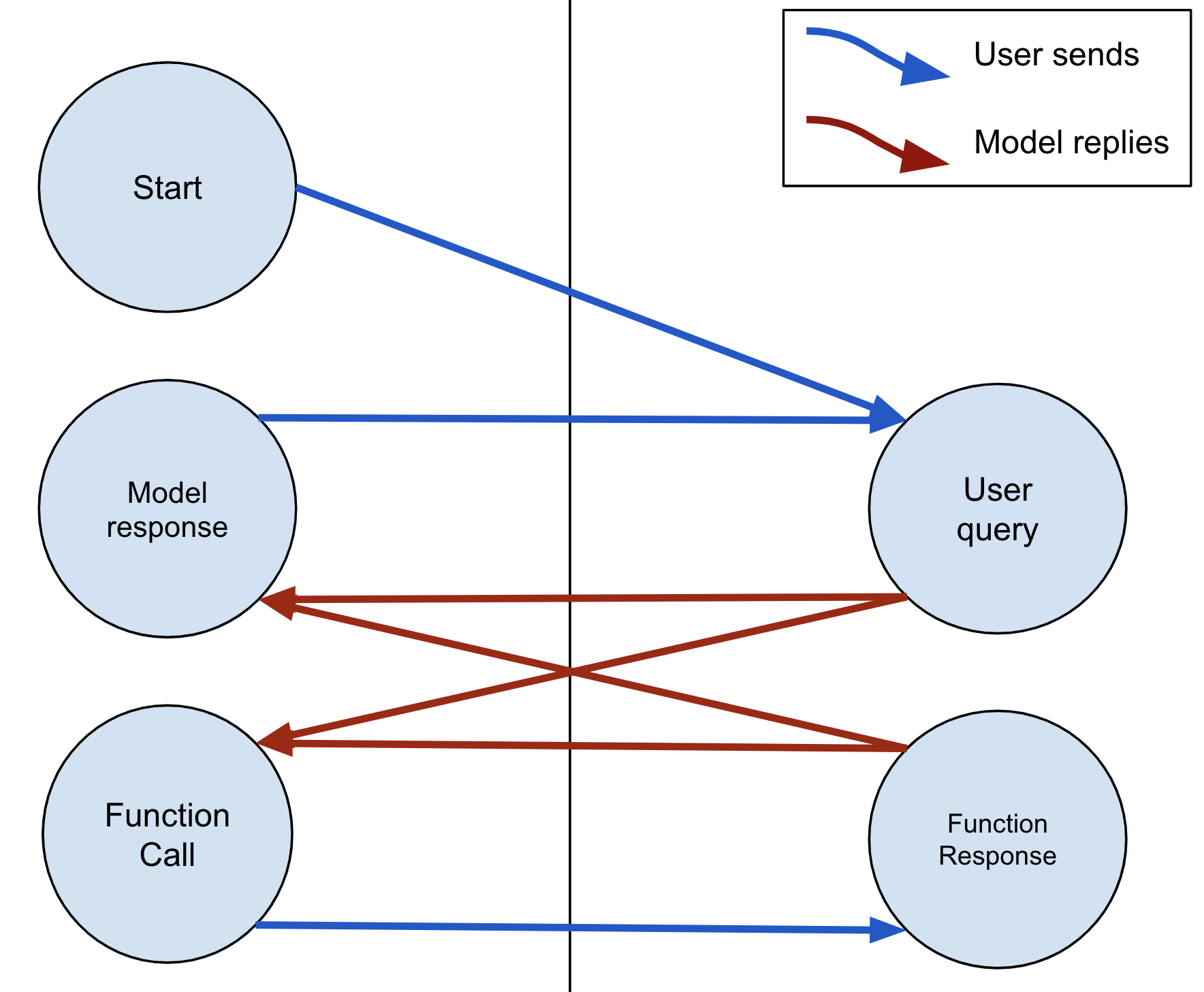

In general the state diagram is:

The model can respond with multiple function calls before returning a text response, and function calls come before the text response.

While this was all handled automatically, if you need more control, you can:

- Leave the default `enable_automatic_function_calling=False` and process the `glm.FunctionCall` responses yourself.
- Or use `GenerativeModel.generate_content`, where you also need to manage the chat history.
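The state diagram and the manual option above both come down to one loop: keep executing function calls until the model answers with text. The sketch below simulates that loop in plain Python. It assumes a scripted list of model replies in place of real API calls and uses plain dicts shaped like the `chat.history` printout; the `add` tool, the question, and the replies are hypothetical. It is not how the SDK implements automatic function calling.

```python
def multiply(a: float, b: float):
    """returns a * b."""
    return a * b

# Hypothetical second tool, so the scripted model can chain two calls.
def add(a: float, b: float):
    """returns a + b."""
    return a + b

TOOLS = {'multiply': multiply, 'add': add}

# Scripted stand-in for the model's replies (hypothetical, not real API output).
SCRIPTED_REPLIES = [
    {'function_call': {'name': 'multiply', 'args': {'a': 57.0, 'b': 44.0}}},
    {'function_call': {'name': 'add', 'args': {'a': 2508.0, 'b': 10.0}}},
    {'text': 'You have 2518 mittens in total.'},
]

def run_turn(question, model_replies, tools):
    """Execute function calls until the (scripted) model replies with text."""
    history = [{'role': 'user', 'part': {'text': question}}]
    for part in model_replies:  # each step stands in for one model request
        history.append({'role': 'model', 'part': part})
        if 'text' in part:
            # A text part ends the turn.
            return part['text'], history
        call = part['function_call']
        result = tools[call['name']](**call['args'])
        # Send the result back as a user-role function_response part.
        history.append({'role': 'user',
                        'part': {'function_response': {'name': call['name'],
                                                       'response': {'result': result}}}})
    raise RuntimeError('The model never produced a text response.')

answer, history = run_turn(
    'I have 57 cats, each owns 44 mittens, plus 10 spare mittens. How many mittens is that?',
    SCRIPTED_REPLIES, TOOLS)
for entry in history:
    print(entry['role'], '->', entry['part'])
```

With automatic function calling, the SDK runs this kind of loop for you; with it disabled, your own code plays the role of `run_turn`.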

[Optional] Low level access

The automatic extraction of the schema from Python functions doesn't work in all cases. For example, it can't describe the fields of a nested dictionary object, even though the API supports this. The API can describe any of the following types:

```python
AllowedType = (int | float | bool | str | list['AllowedType'] | dict[str, 'AllowedType'])
```
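Read as a value check, the recursive `AllowedType` definition says a value is describable if it is a scalar (`int`, `float`, `bool`, `str`) or a list or string-keyed dict built from describable values. The `is_allowed` helper below is a hypothetical illustration of that recursion, not part of the SDK.

```python
def is_allowed(value) -> bool:
    """Return True if value fits AllowedType: a scalar, or a list / str-keyed
    dict whose contents are themselves AllowedType (checked recursively)."""
    if isinstance(value, (int, float, bool, str)):
        return True
    if isinstance(value, list):
        return all(is_allowed(item) for item in value)
    if isinstance(value, dict):
        return all(isinstance(key, str) and is_allowed(item)
                   for key, item in value.items())
    # Anything else (None, tuples, custom objects, ...) is outside the type.
    return False

print(is_allowed({'order': {'items': ['tea', 'cake'], 'qty': 2, 'gift': True}}))
print(is_allowed({'when': None}))
```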

The `google.ai.generativelanguage` client library provides access to the low-level types, giving you full control.

```python
import google.ai.generativelanguage as glm
```

First, peek inside the model's `_tools` attribute to see how it describes the function(s) you passed to the model:

```python
def multiply(a: float, b: float):
    """returns a * b."""
    return a * b

model = genai.GenerativeModel(model_name='gemini-1.0-pro',
                              tools=[multiply])

model._tools.to_proto()
```

```
[function_declarations {
  name: "multiply"
  description: "returns a * b."
  parameters {
    type_: OBJECT
    properties {
      key: "b"
      value {
        type_: NUMBER
      }
    }
    properties {
      key: "a"
      value {
        type_: NUMBER
      }
    }
    required: "a"
    required: "b"
  }
}]
```

This returns the list of glm.Tool objects that would be sent to the API. If the printed format is not familiar, it's because these are Google protobuf classes. Each glm.Tool (1 in this case) contains a list of glm.FunctionDeclarations, which describe a function and its arguments.
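To illustrate the mapping from a Python function to a declaration like the one above, here is a rough sketch that builds the JSON-compatible form from a function's signature and docstring. The `describe_function` helper and its annotation-to-type table are assumptions for illustration, not the SDK's implementation; it only handles scalar parameters, which is where automatic extraction stops being enough.

```python
import inspect

# Assumed mapping from Python annotations to the Schema type names printed above.
_TYPE_NAMES = {float: 'NUMBER', int: 'INTEGER', str: 'STRING', bool: 'BOOLEAN'}

def describe_function(fn):
    """Hypothetical helper: build a JSON-compatible function declaration
    from fn's signature and docstring. Raises KeyError for unsupported
    annotations (anything not in _TYPE_NAMES)."""
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        properties[name] = {'type_': _TYPE_NAMES[param.annotation]}
        # Parameters without a default value are treated as required.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        'name': fn.__name__,
        'description': inspect.getdoc(fn) or '',
        'parameters': {'type_': 'OBJECT',
                       'properties': properties,
                       'required': required},
    }

def multiply(a: float, b: float):
    """returns a * b."""
    return a * b

print(describe_function(multiply))
```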

Here is a declaration for the same multiply function written using the glm classes.

Note that these classes only describe the function for the API; they don't include an implementation of it. So this approach doesn't work with automatic function calling, but functions don't always need an implementation.

```python
calculator = glm.Tool(
    function_declarations=[
        glm.FunctionDeclaration(
            name='multiply',
            description="Returns the product of two numbers.",
            parameters=glm.Schema(
                type=glm.Type.OBJECT,
                properties={
                    'a': glm.Schema(type=glm.Type.NUMBER),
                    'b': glm.Schema(type=glm.Type.NUMBER)
                },
                required=['a', 'b']
            )
        )
    ])
```

Equivalently, you can describe this as a JSON-compatible object:

```python
calculator = {'function_declarations': [
    {'name': 'multiply',
     'description': 'Returns the product of two numbers.',
     'parameters': {'type_': 'OBJECT',
                    'properties': {
                        'a': {'type_': 'NUMBER'},
                        'b': {'type_': 'NUMBER'}},
                    'required': ['a', 'b']}}]}

glm.Tool(calculator)
```

```
function_declarations {
  name: "multiply"
  description: "Returns the product of two numbers."
  parameters {
    type_: OBJECT
    properties {
      key: "b"
      value {
        type_: NUMBER
      }
    }
    properties {
      key: "a"
      value {
        type_: NUMBER
      }
    }
    required: "a"
    required: "b"
  }
}
```
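Because the JSON-compatible form is plain data, it can also express the nested-object case that automatic extraction misses. The `place_order` declaration below is hypothetical and assumes nested objects use the same `type_`/`properties`/`required` keys as the top-level parameters; the `missing_required` helper is a simple sanity check written for this sketch, not an SDK feature.

```python
# Hypothetical declaration: 'order' is itself an object with its own fields.
place_order = {
    'name': 'place_order',
    'description': 'Places an order for a single item.',
    'parameters': {
        'type_': 'OBJECT',
        'properties': {
            'order': {
                'type_': 'OBJECT',
                'properties': {
                    'item': {'type_': 'STRING'},
                    'quantity': {'type_': 'INTEGER'},
                },
                'required': ['item', 'quantity'],
            },
        },
        'required': ['order'],
    },
}

def missing_required(schema, path='parameters'):
    """Return dotted paths of 'required' names with no matching property,
    recursing into nested object schemas."""
    problems = []
    props = schema.get('properties', {})
    for name in schema.get('required', []):
        if name not in props:
            problems.append(f'{path}.{name}')
    for name, sub_schema in props.items():
        problems.extend(missing_required(sub_schema, f'{path}.{name}'))
    return problems

print(missing_required(place_order['parameters']))
```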

Either way, you pass a representation of a `glm.Tool` or a list of tools to the `tools` argument of `genai.GenerativeModel`:

```python
model = genai.GenerativeModel('gemini-pro', tools=calculator)
chat = model.start_chat()

response = chat.send_message(
    "What's 234551 X 325552 ?",
)
```

As before, the model returns a `glm.FunctionCall` invoking the calculator's `multiply` function:

```python
response.candidates
```

```
[index: 0
content {
  parts {
    function_call {
      name: "multiply"
      args {
        fields {
          key: "b"
          value {
            number_value: 325552
          }
        }
        fields {
          key: "a"
          value {
            number_value: 234551
          }
        }
      }
    }
  }
  role: "model"
}
finish_reason: STOP
]
```

Execute the function yourself:

```python
fc = response.candidates[0].content.parts[0].function_call
assert fc.name == 'multiply'

result = fc.args['a'] * fc.args['b']
result
```

```
76358547152.0
```

Send the result to the model to continue the conversation:

```python
response = chat.send_message(
    glm.Content(
        parts=[glm.Part(
            function_response=glm.FunctionResponse(
                name='multiply',
                response={'result': result}))]))
```
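With more than one tool, the hard-coded `assert fc.name == 'multiply'` above generalizes to a lookup table. This sketch assumes the call has already been converted to a plain dict such as `{'name': ..., 'args': {...}}` (for example with `type(fc).to_dict(fc)`, the same conversion the history printout uses); `FUNCTIONS` and `handle_call` are hypothetical names. It returns the function-response part as a dict in the same shape the history printout shows, mirroring the `glm.Part`/`glm.FunctionResponse` nesting used above.

```python
def multiply(a: float, b: float):
    """returns a * b."""
    return a * b

# Hypothetical registry mapping declared function names to local code.
FUNCTIONS = {'multiply': multiply}

def handle_call(call: dict, functions: dict = FUNCTIONS) -> dict:
    """Run one function call and wrap its result in a function_response part."""
    name = call['name']
    if name not in functions:
        # Refuse to guess: the model asked for something we never declared.
        raise ValueError(f'Model requested an unknown function: {name!r}')
    result = functions[name](**call.get('args', {}))
    return {'function_response': {'name': name, 'response': {'result': result}}}

part = handle_call({'name': 'multiply', 'args': {'a': 234551.0, 'b': 325552.0}})
print(part)
```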

Summary

Basic function calling is supported in the SDK. Remember that it is easier to manage in chat mode, because of its natural back-and-forth structure. Unless you enable automatic function calling, you're in charge of actually calling the functions and sending the results back to the model so it can produce a text response.