
This notebook shows how to get started with the tuning service using the Python client library for the Gemini API. You'll tune the text model behind the Gemini API's text generation service.

Setup

Authenticate

The Gemini API lets you tune models on your own data. Because it's your data and your tuned models, this needs stricter access controls than API keys can provide.

Before you can run this tutorial, you'll need to set up OAuth for your project.

In Colab, the easiest way to get set up is to copy the contents of your client_secret.json file into Colab's "Secrets manager" (under the key icon in the left panel) with the secret name CLIENT_SECRET.

This gcloud command turns the client_secret.json file into credentials that can be used to authenticate with the service.

import os

if 'COLAB_RELEASE_TAG' in os.environ:
    from google.colab import userdata
    import pathlib
    pathlib.Path('client_secret.json').write_text(userdata.get('CLIENT_SECRET'))

    # Use `--no-browser` in colab
    !gcloud auth application-default login --no-browser --client-id-file client_secret.json --scopes='https://www.googleapis.com/auth/cloud-platform,https://www.googleapis.com/auth/generative-language.tuning'
else:
    !gcloud auth application-default login --client-id-file client_secret.json --scopes='https://www.googleapis.com/auth/cloud-platform,https://www.googleapis.com/auth/generative-language.tuning'

Install the client library

pip install -q google-generativeai

Import libraries

import google.generativeai as genai

You can check your existing tuned models with the genai.list_tuned_models method.

for i, m in zip(range(5), genai.list_tuned_models()):
    print(m.name)

tunedModels/my-model-8527
tunedModels/my-model-7092
tunedModels/my-model-2778
tunedModels/my-model-1298
tunedModels/my-model-3883
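`zip(range(5), ...)` stops after five items because `zip` ends with its shortest input. The standard library's `itertools.islice` expresses "first five" more directly. This sketch uses a hypothetical stand-in generator, `fake_tuned_models`, in place of `genai.list_tuned_models()` so it runs without credentials.

```python
from itertools import islice
from types import SimpleNamespace


def fake_tuned_models():
    # Hypothetical stand-in for genai.list_tuned_models(): yields objects with a .name attribute.
    for i in range(8):
        yield SimpleNamespace(name=f"tunedModels/example-{i}")


def first_names(models, limit=5):
    # Take at most `limit` items from a (possibly lazy) iterator without consuming the rest.
    return [m.name for m in islice(models, limit)]


for model_name in first_names(fake_tuned_models()):
    print(model_name)
```

With credentials configured, `first_names(genai.list_tuned_models())` should print names like the output above.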

Create tuned model

To create a tuned model, pass your dataset to the genai.create_tuned_model method. You can do this by defining the input and output values directly in the call, or by importing them from a file into a dataframe and passing that to the method.
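Training data can come from a file instead of inline dicts. A minimal sketch, assuming a CSV file with `text_input` and `output` header columns (the column names mirror the keys used in the inline training data in this tutorial; the file layout and the `load_training_data` helper are assumptions), reads rows into the same list-of-dicts shape with the standard `csv` module.

```python
import csv
import io


def load_training_data(fileobj):
    # Read rows with header `text_input,output` into a list of {'text_input', 'output'} dicts.
    reader = csv.DictReader(fileobj)
    return [{"text_input": row["text_input"], "output": row["output"]} for row in reader]


# In-memory CSV so the sketch runs without a file on disk.
# For a real (hypothetical) file, use: open("train.csv", newline="")
sample_csv = io.StringIO('text_input,output\n1,2\n"1,000","1,001"\nthirteen,fourteen\n')
training_data = load_training_data(sample_csv)
print(training_data)
```

Values containing commas, such as `1,000`, must be quoted in the CSV so they stay in one field.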

For this example, you will tune a model to generate the next number in the sequence. For example, if the input is 1, the model should output 2. If the input is one hundred, the output should be one hundred one.
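The task maps each number to its successor, so digit-based examples can also be generated programmatically. A sketch using a hypothetical helper, `make_numeric_examples`, which is not part of the tutorial; it uses Python's `,` format specifier to produce thousands separators in the same style as the `'1,000'` to `'1,001'` example.

```python
import random


def make_numeric_examples(count, low=-1000, high=10_000_000, seed=0):
    # Build `count` {'text_input', 'output'} pairs where output is input + 1.
    rng = random.Random(seed)  # seeded so the generated set is reproducible
    examples = []
    for _ in range(count):
        n = rng.randint(low, high)
        examples.append({"text_input": f"{n:,}", "output": f"{n + 1:,}"})
    return examples


for example in make_numeric_examples(3):
    print(example)
```

Spelled-out inputs such as `'twenty two'` would still need to be written by hand or produced with a separate number-to-words tool.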

base_model = [
    m for m in genai.list_models()
    if "createTunedModel" in m.supported_generation_methods][0]
base_model

Model(name='models/gemini-1.0-pro-001',
      base_model_id='',
      version='001',
      display_name='Gemini 1.0 Pro',
      description=('The best model for scaling across a wide range of tasks. This is a stable '
                   'model that supports tuning.'),
      input_token_limit=30720,
      output_token_limit=2048,
      supported_generation_methods=['generateContent', 'countTokens', 'createTunedModel'],
      temperature=0.9,
      top_p=1.0,
      top_k=1)
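Indexing the list comprehension with `[0]` raises an `IndexError` if no listed model supports tuning. A sketch of a more defensive selection using `next()` with a default; the `pick_tunable` helper and the stand-in model objects are hypothetical, shaped like the `Model` objects returned by `genai.list_models()` so the example runs offline.

```python
from types import SimpleNamespace


def pick_tunable(models, method="createTunedModel"):
    # Return the first model whose supported_generation_methods includes `method`, or None.
    return next((m for m in models if method in m.supported_generation_methods), None)


# Hypothetical stand-ins for the objects genai.list_models() yields.
models = [
    SimpleNamespace(name="models/example-embedding",
                    supported_generation_methods=["embedContent"]),
    SimpleNamespace(name="models/example-tunable",
                    supported_generation_methods=["generateContent", "createTunedModel"]),
]

chosen = pick_tunable(models)
print(chosen.name if chosen else "no tunable model found")
```

With credentials, `pick_tunable(genai.list_models())` would replace the list-and-index expression and return `None` instead of raising when nothing matches.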

import random

name = f'generate-num-{random.randint(0,10000)}'

operation = genai.create_tuned_model(
    # You can use a tuned model here too. Set `source_model="tunedModels/..."`
    source_model=base_model.name,
    training_data=[
        {'text_input': '1', 'output': '2'},
        {'text_input': '3', 'output': '4'},
        {'text_input': '-3', 'output': '-2'},
        {'text_input': 'twenty two', 'output': 'twenty three'},
        {'text_input': 'two hundred', 'output': 'two hundred one'},
        {'text_input': 'ninety nine', 'output': 'one hundred'},
        {'text_input': '8', 'output': '9'},
        {'text_input': '-98', 'output': '-97'},
        {'text_input': '1,000', 'output': '1,001'},
        {'text_input': '10,100,000', 'output': '10,100,001'},
        {'text_input': 'thirteen', 'output': 'fourteen'},
        {'text_input': 'eighty', 'output': 'eighty one'},
        {'text_input': 'one', 'output': 'two'},
        {'text_input': 'three', 'output': 'four'},
        {'text_input': 'seven', 'output': 'eight'},
    ],
    id=name,
    epoch_count=100,
    batch_size=4,
    learning_rate=0.001,
)
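Before starting a long tuning job, it can help to check the examples locally. A minimal sketch of a hypothetical `validate_examples` helper; its checks (both keys present, non-empty string values, no duplicate inputs) are assumptions about what a clean dataset looks like, not rules enforced by the Gemini API.

```python
def validate_examples(examples):
    # Return a list of human-readable problems found in the training examples.
    problems = []
    seen_inputs = set()
    for i, ex in enumerate(examples):
        for key in ("text_input", "output"):
            value = ex.get(key)
            if not isinstance(value, str) or not value.strip():
                problems.append(f"example {i}: missing or empty {key!r}")
        text = ex.get("text_input")
        if isinstance(text, str):
            if text in seen_inputs:
                problems.append(f"example {i}: duplicate text_input {text!r}")
            seen_inputs.add(text)
    return problems


print(validate_examples([
    {"text_input": "1", "output": "2"},
    {"text_input": "1", "output": ""},
]))
```

An empty list means none of these checks found a problem.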

Your tuned model is immediately added to the list of tuned models, but its status is set to "creating" while the model is tuned.

model = genai.get_tuned_model(f'tunedModels/{name}')

model

TunedModel(name='tunedModels/generate-num-2946',
           source_model='models/gemini-1.0-pro-001',
           base_model='models/gemini-1.0-pro-001',
           display_name='',
           description='',
           temperature=0.9,
           top_p=1.0,
           top_k=1,
           state=<State.CREATING: 1>,
           create_time=datetime.datetime(2024, 2, 21, 20, 4, 16, 448050, tzinfo=datetime.timezone.utc),
           update_time=datetime.datetime(2024, 2, 21, 20, 4, 16, 448050, tzinfo=datetime.timezone.utc),
           tuning_task=TuningTask(start_time=datetime.datetime(2024, 2, 21, 20, 4, 16, 890698, tzinfo=datetime.timezone.utc),
                                  complete_time=None,
                                  snapshots=[],
                                  hyperparameters=Hyperparameters(epoch_count=100,
                                                                  batch_size=4,
                                                                  learning_rate=0.001)))

model.state

<State.CREATING: 1>
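The state stays `CREATING` until tuning finishes. A generic polling sketch, assuming you pass in a zero-argument function that returns the current state name, for example a hypothetical `lambda: genai.get_tuned_model(f'tunedModels/{name}').state.name`. The `wait_until_done` helper, its interval, and its timeout are illustrative assumptions; `operation.wait_bar()`, used later in this tutorial, is the library's own way to wait.

```python
import time


def wait_until_done(get_state, pending=("CREATING",), interval=30.0,
                    timeout=3600.0, sleep=time.sleep):
    # Poll get_state() until it returns a value outside `pending`, or raise TimeoutError.
    waited = 0.0
    while True:
        state = get_state()
        if state not in pending:
            return state
        if waited >= timeout:
            raise TimeoutError(f"still {state} after {waited:.0f}s")
        sleep(interval)
        waited += interval


# Offline demo: a fake sequence of states and a no-op sleep.
states = iter(["CREATING", "CREATING", "ACTIVE"])
print(wait_until_done(lambda: next(states), sleep=lambda s: None))
```

Injecting `sleep` keeps the helper testable without real waiting.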

Check tuning progress

Use metadata to check the state:

operation.metadata

total_steps: 375
tuned_model: "tunedModels/generate-num-2946"
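For this run, the reported `total_steps` is consistent with epochs times examples divided by batch size: 15 training examples, `epoch_count=100`, and `batch_size=4` give 15 × 100 / 4 = 375. The formula, and rounding up when the division is not exact, are assumptions inferred from these numbers rather than documented service behavior.

```python
import math


def estimated_total_steps(num_examples, epoch_count, batch_size):
    # Assumed relation: total examples seen across all epochs, split into batches (rounded up).
    return math.ceil(num_examples * epoch_count / batch_size)


print(estimated_total_steps(num_examples=15, epoch_count=100, batch_size=4))  # 375
```

This gives a rough sense of how changing `epoch_count` or `batch_size` would scale the job length.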

Wait for the training to finish using operation.result() or operation.wait_bar().

import time

for status in operation.wait_bar():
    time.sleep(30)

0%| | 0/375 [00:00<?, ?it/s]

You can cancel your tuning job any time using the cancel() method. Uncomment the line below and run the code cell to cancel your job before it finishes.

# operation.cancel()

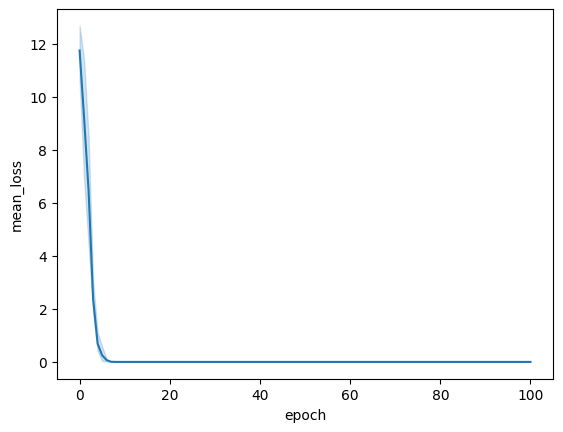

Once the tuning is complete, you can view the loss curve from the tuning results. The loss curve shows how much the model's predictions deviate from the ideal outputs.

import pandas as pd

import seaborn as sns

model = operation.result()

snapshots = pd.DataFrame(model.tuning_task.snapshots)

sns.lineplot(data=snapshots, x = 'epoch', y='mean_loss')

<Axes: xlabel='epoch', ylabel='mean_loss'>
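With 15 examples and a batch size of 4, there are fewer than four steps per epoch, so if snapshots are recorded per step (as appears likely here), several snapshots share an `epoch` value. By default, `seaborn.lineplot` aggregates repeated x values to their mean and draws a confidence band around it. A standard-library sketch of that per-epoch averaging, using the `epoch` and `mean_loss` field names from the plot call above and made-up toy values:

```python
from collections import defaultdict


def mean_loss_by_epoch(snapshots):
    # Average the 'mean_loss' of all snapshots that share an 'epoch' value.
    totals = defaultdict(float)
    counts = defaultdict(int)
    for snap in snapshots:
        totals[snap["epoch"]] += snap["mean_loss"]
        counts[snap["epoch"]] += 1
    return {epoch: totals[epoch] / counts[epoch] for epoch in sorted(totals)}


# Toy values for illustration only, not real tuning results.
toy = [
    {"epoch": 1, "mean_loss": 4.0},
    {"epoch": 1, "mean_loss": 2.0},
    {"epoch": 2, "mean_loss": 1.5},
    {"epoch": 2, "mean_loss": 0.5},
]
print(mean_loss_by_epoch(toy))  # {1: 3.0, 2: 1.0}
```

With the pandas DataFrame from the cell above, `snapshots.groupby('epoch')['mean_loss'].mean()` computes the same per-epoch averages.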

Evaluate your model

To test your model's performance, create a genai.GenerativeModel with your tuned model's name and call its generate_content method.

model = genai.GenerativeModel(model_name=f'tunedModels/{name}')

result = model.generate_content('55')

result.text

'56'

result = model.generate_content('123455')

result.text

'123456'

result = model.generate_content('four')

result.text

'five'

result = model.generate_content('quatre') # French 4

result.text # French 5 is "cinq"

'cinq'

result = model.generate_content('III') # Roman numeral 3

result.text # Roman numeral 4 is IV

'IV'

result = model.generate_content('七') # Japanese 7

result.text # Japanese 8 is 八!

'八'

The model seems to have picked up the task despite the limited examples, but "next" is a simple concept. See the tuning guide for more guidance on improving performance.
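Spot-checking single prompts is a quick sanity check. To score a set of held-out examples, a minimal sketch computes exact-match accuracy for any `generate` function that maps a prompt string to a response string. With the tuned model, that could be `lambda p: model.generate_content(p).text`; the offline demo uses a hypothetical rule-based stand-in, and the `exact_match_accuracy` helper is likewise illustrative.

```python
def exact_match_accuracy(examples, generate):
    # Fraction of examples whose generated text (whitespace-trimmed) equals the expected output.
    if not examples:
        return 0.0
    hits = sum(generate(ex["text_input"]).strip() == ex["output"] for ex in examples)
    return hits / len(examples)


def fake_generate(prompt):
    # Hypothetical stand-in for the tuned model: increments plain integers, otherwise echoes.
    return str(int(prompt) + 1) if prompt.lstrip("-").isdigit() else prompt


held_out = [
    {"text_input": "55", "output": "56"},
    {"text_input": "123455", "output": "123456"},
    {"text_input": "four", "output": "five"},
]
print(exact_match_accuracy(held_out, fake_generate))
```

Exact match is strict: a response such as `'One hundred one'` would count as wrong against `'one hundred one'`, so you may want to normalize case before comparing.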

Update the description

You can update the description of your tuned model any time using the genai.update_tuned_model method.

genai.update_tuned_model(f'tunedModels/{name}', {"description":"This is my model."});

model = genai.get_tuned_model(f'tunedModels/{name}')

model.description

'This is my model.'

Delete the model

You can clean up your tuned model list by deleting models you no longer need. Use the genai.delete_tuned_model method to delete a model. If you canceled any tuning jobs, you may want to delete those as their performance may be unpredictable.

genai.delete_tuned_model(f'tunedModels/{name}')

The model no longer exists:

try:
    m = genai.get_tuned_model(f'tunedModels/{name}')
    print(m)
except Exception as e:
    print(f"{type(e)}: {e}")

<class 'google.api_core.exceptions.NotFound'>: 404 Tuned model tunedModels/generate-num-2946 does not exist.
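If repeated runs of this notebook leave several `generate-num-*` models behind, you might clean them up by name prefix. A sketch with injected list and delete functions so it runs offline; with credentials, these could be `genai.list_tuned_models` and `genai.delete_tuned_model`. The `delete_by_prefix` helper, its prefix filter, and its `dry_run` flag are illustrative assumptions.

```python
from types import SimpleNamespace


def delete_by_prefix(list_models, delete_model, prefix, dry_run=True):
    # Collect tuned-model names starting with `prefix`; delete them only when dry_run is False.
    targets = [m.name for m in list_models() if m.name.startswith(prefix)]
    if not dry_run:
        for target in targets:
            delete_model(target)
    return targets


# Offline demo with hypothetical stand-ins for genai.list_tuned_models / genai.delete_tuned_model.
fake_models = [SimpleNamespace(name=n) for n in
               ["tunedModels/generate-num-1", "tunedModels/my-model-8527",
                "tunedModels/generate-num-2"]]
deleted = []
print(delete_by_prefix(lambda: fake_models, deleted.append,
                       "tunedModels/generate-num-", dry_run=False))
print(deleted)
```

Running once with the default `dry_run=True` lists what would be deleted without deleting anything.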